An approach for automated fact checking

A short while ago I took part in a hackathon organized by the Wiener Zeitung, where the theme was tackling problems in the media space. Since I’m working on an RSS feed reader myself, I have a lot of ideas, but not the time to work on them. This hackathon was the perfect opportunity to validate whether the fact-checking system I had thought of some time ago could work. The result was better than expected.

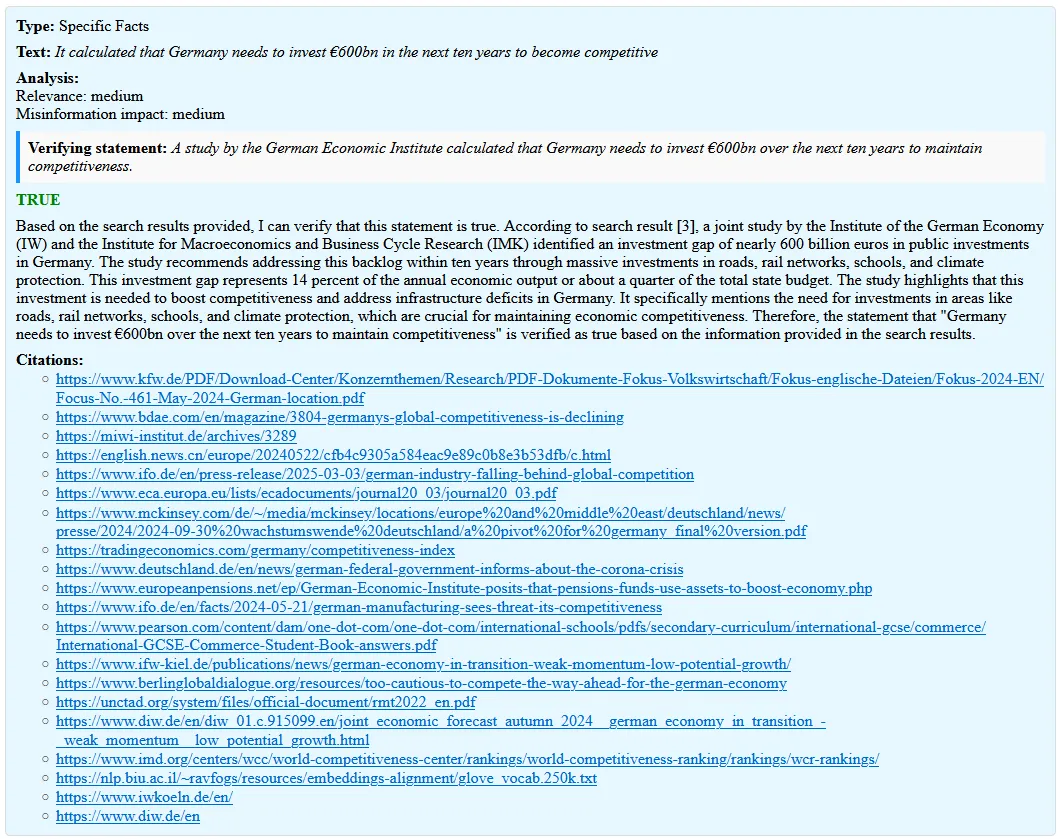

Screenshot

I wish I had taken screenshots along the way so I could show example results of each step. But I didn’t (it was a hackathon with a time limit, after all), so the textual description of the intermediate steps will have to do.

But at least here’s a screenshot of the end result. It contains mistakes; I didn’t try to find a case where it works particularly well. The implementation itself isn’t great either, but more on that below.

The full screenshot is too long to include in the article, but you can find it here.

The approach

The main approach is very simple.

- Extract statements to verify

- Verify them

LLMs can do a lot of that, and with such a simple approach I feared I’d be done an hour after the hackathon started. That fear was unfounded.
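Expressed as code, the two steps form a tiny pipeline. The TypeScript sketch below (TypeScript because the prompts further down are written as template literals) is not the prototype’s code: `checkArticle`, `Steps`, and `Verdict` are hypothetical names, and the actual extraction and verification calls are passed in as functions so any model or service can be plugged in.

```typescript
// Hypothetical verdict type, matching the true/false/null answers used for verification.
type Verdict = "true" | "false" | "null";

interface CheckedStatement {
  statement: string;
  verdict: Verdict;
}

// Both steps are injected, so the pipeline itself doesn't depend on a particular
// model or search API. These signatures are assumptions for illustration.
interface Steps {
  extract: (articleText: string) => Promise<string[]>;
  verify: (statement: string) => Promise<Verdict>;
}

// Step 1: extract statements to verify. Step 2: verify each one, one call per statement.
async function checkArticle(articleText: string, steps: Steps): Promise<CheckedStatement[]> {
  const statements = await steps.extract(articleText);
  const results: CheckedStatement[] = [];
  for (const statement of statements) {
    results.push({ statement, verdict: await steps.verify(statement) });
  }
  return results;
}
```

Everything interesting happens inside `extract` and `verify`, which is what the rest of this article is about.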

Extracting statements

Using LLMs

The first approach was to use an LLM (GPT-4o) to extract factual statements. As you can imagine, this didn’t work well. LLMs are not reliable enough (at least not yet) to do that properly. Sometimes the result contained 10 items, sometimes 25. Sometimes it split a sentence containing two claims into two items, sometimes not.

It may be possible to improve the consistency of results with prompt engineering, but that would then make the system reliant on the model and its specific version. That’s a dependency I didn’t want to have, because at some point it should work with smaller models as well.

So I was looking for an alternative.

Splitting by sentence

The next approach was much simpler: just split the text into sentences. Doing this properly can require fairly complex logic (handling quotes, periods in numbers, and so on), but for this first version I simply split on periods. In some cases this produced gibberish, but for the hackathon I ignored those cases.
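A minimal sketch of both options in TypeScript. `splitByPeriod` mirrors the split-on-periods approach described above; `splitSentences` is a hypothetical, slightly more careful alternative that only splits after `.`, `!`, or `?` when whitespace and an uppercase letter or opening quote follow, so decimals like “53.5” stay intact. Neither handles abbreviations such as “Dr. Smith”.

```typescript
// Naive version: split on every period, as in the prototype.
function splitByPeriod(text: string): string[] {
  return text
    .split(".")
    .map((s) => s.trim())
    .filter((s) => s.length > 0);
}

// Hypothetical improvement: split only where a sentence-ending character is
// followed by whitespace and then an uppercase letter (any script, via \p{Lu})
// or an opening quote. The lookbehind keeps the punctuation with its sentence.
function splitSentences(text: string): string[] {
  return text
    .split(/(?<=[.!?])\s+(?=[\p{Lu}"“„])/u)
    .map((s) => s.trim())
    .filter((s) => s.length > 0);
}
```

For “The budget is €53.5bn. It rose.”, the naive version returns three fragments (“The budget is €53”, “5bn”, “It rose”), while `splitSentences` returns the two actual sentences.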

The result is a list of sentences, which are statements that potentially need verification. Not every sentence does, though. For example, there’s no point in verifying “When a German party triggers a Zählappell, or parliamentary roll call, it is serious business.”

Classifying sentences into fact types

Skipping sentences that don’t need verification would improve the user experience (only relevant verification information is displayed) and reduce costs.

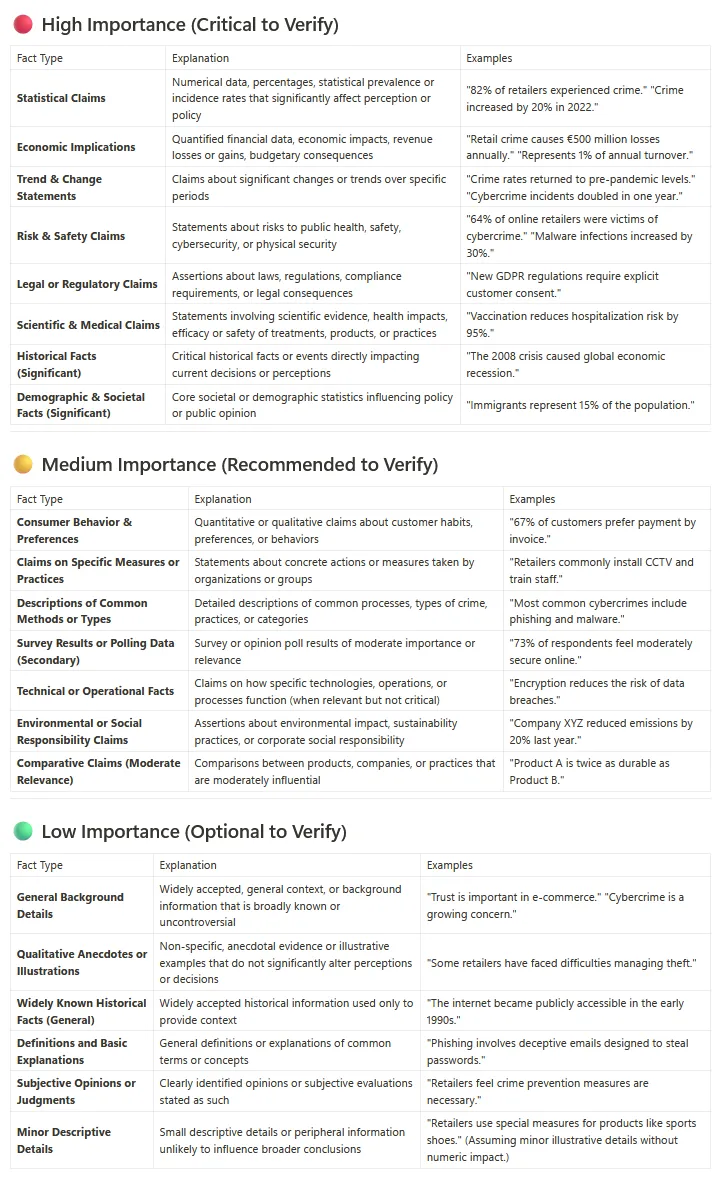

The first approach I tried was classifying each sentence in isolation into a fact type. With the help of GPT-4.5 I created a list of fact types, categorized by how important it is to verify them.

Based on that, I created a reduced list of the fact types I want to verify:

- Quantified data: anything with numbers

- Trend and change statements

- Risk or safety claims

- Legal claims

- Scientific claims

- Significant historical facts

- Significant societal facts

Neither classification with the full list nor with the reduced list worked well. It was just too easy to classify each statement into one of the important types, even if it wasn’t important. The classification always made at least some sense, so I couldn’t even say the LLM made a mistake, which is a surefire sign that a different strategy is needed.

Classifying sentences in context

Next I tried sending the specific sentence together with the whole article to the LLM, asking whether it’s important to verify this sentence in the context of the article.

I tried both a yes/no and a high/medium/low classification. This worked better, but still not great.

Extended classification in context

There was a bit more experimentation along those lines, but I’ll jump to the end now. The approach that worked best first classifies each sentence into one of these categories:

- Specific Facts

- General Knowledge/Facts

- Opinions/Subjective

- Speculative/Hypothetical

- Questions/Rhetoricals

- Instructions/Imperatives

- Quotations/Attributions

- Ambiguous/Uncertain

- Other

Only Specific Facts and Quotations/Attributions are processed further. All other categories act as honeypots: the LLM has other options and puts each sentence where it fits best, instead of putting everything that loosely fits into one of those two.

This happens out of context. Each sentence is sent to the LLM without additional information.
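The out-of-context classification can be sketched as below. The category list is the one above; the prompt wording, `parseCategory`, and the fallback to “Other” for unrecognized answers are assumptions, since the exact classification prompt isn’t shown in this article.

```typescript
// The categories from the list above. Only two are processed further;
// the rest act as honeypots that give the model somewhere else to put a sentence.
const CATEGORIES = [
  "Specific Facts",
  "General Knowledge/Facts",
  "Opinions/Subjective",
  "Speculative/Hypothetical",
  "Questions/Rhetoricals",
  "Instructions/Imperatives",
  "Quotations/Attributions",
  "Ambiguous/Uncertain",
  "Other",
] as const;

type Category = (typeof CATEGORIES)[number];

const KEEP: ReadonlySet<Category> = new Set<Category>(["Specific Facts", "Quotations/Attributions"]);

// Hypothetical prompt: only the sentence is sent, without the article.
function classificationPrompt(sentence: string): string {
  return `Classify the following sentence into exactly one of these categories: ${CATEGORIES.join(", ")}.

Sentence: "${sentence}"

Answer with the category name only.`;
}

// Map the model's raw answer to a category; anything unrecognized becomes "Other".
function parseCategory(answer: string): Category {
  const trimmed = answer.trim();
  return (CATEGORIES as readonly string[]).includes(trimmed) ? (trimmed as Category) : "Other";
}

function needsFurtherProcessing(category: Category): boolean {
  return KEEP.has(category);
}
```

Treating unknown answers as “Other” means a malformed reply drops the sentence instead of sending it on; whether that’s the right default is a judgment call.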

The second step analyzes the sentence in context: how important it is to the text and how big the impact would be if it were wrong. This is the prompt I used for it:

```
`Original text: "${articleText}"

Sentence to analyze: "${fact}"

Analyze this sentence from the text along the following dimensions:

- Relevance & Context: How central is the sentence to the text's main arguments or narrative?

- Impact of Misinformation: If the sentence is wrong, would it have significant consequences?`
```

The LLM call also includes a tool that restricts the result for both dimensions to "low" | "medium" | "high".

If either dimension is rated low, the sentence is excluded from further verification.
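A sketch of that restriction and the filter. Tool-calling APIs describe a tool’s arguments with JSON Schema, and its `enum` keyword is one way to limit both dimensions to the three values. The schema, `parseAssessment`, and `shouldVerify` are hypothetical; how the schema is attached to a request depends on the provider.

```typescript
type Rating = "low" | "medium" | "high";

interface Assessment {
  relevance: Rating;
  impact: Rating;
}

const RATINGS: readonly Rating[] = ["low", "medium", "high"];

// JSON Schema for the tool arguments: both dimensions are limited to three values.
const assessmentSchema = {
  type: "object",
  properties: {
    relevance: { type: "string", enum: ["low", "medium", "high"] },
    impact: { type: "string", enum: ["low", "medium", "high"] },
  },
  required: ["relevance", "impact"],
} as const;

// Validate the tool-call arguments (a JSON string) instead of trusting them blindly.
function parseAssessment(argumentsJson: string): Assessment | null {
  const isRating = (v: unknown): v is Rating =>
    typeof v === "string" && (RATINGS as readonly string[]).includes(v);
  try {
    const raw = JSON.parse(argumentsJson) as Record<string, unknown>;
    if (isRating(raw.relevance) && isRating(raw.impact)) {
      return { relevance: raw.relevance, impact: raw.impact };
    }
  } catch {
    // Malformed JSON (or a JSON null) falls through to null.
  }
  return null;
}

// A sentence is only verified if neither dimension is rated "low".
function shouldVerify(a: Assessment): boolean {
  return a.relevance !== "low" && a.impact !== "low";
}
```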

Verification

Compared to statement extraction, the verification step is simple. I spent much more time on statement extraction. Before starting this little project I thought it’d be the other way around.

Basically, the verification step sends the statements to Perplexity and asks it to verify each one. It makes one request per statement.
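Since every statement gets its own request, the requests can run in parallel. The sketch below assumes a hypothetical `Ask` function that sends one prompt to Perplexity (or any other service) and returns the text answer; `verifyEach` and its concurrency `limit` are illustrations, not part of the prototype. It wraps each statement in the verification prompt quoted below.

```typescript
// Hypothetical: sends one prompt to the verification service and returns its text answer.
type Ask = (prompt: string) => Promise<string>;

// The verification prompt, as a function of the statement.
function verificationPrompt(fact: string): string {
  return `${fact}

Verify the above fact. Return true, false, or null (if not enough sources could be found to verify).`;
}

// One request per statement, at most `limit` in flight, answers kept in input order.
async function verifyEach(statements: string[], ask: Ask, limit = 4): Promise<string[]> {
  const answers: string[] = new Array<string>(statements.length);
  let next = 0;
  // Each worker claims the next unprocessed index. JavaScript runs this on a
  // single thread, so reading and incrementing `next` can't race.
  const worker = async (): Promise<void> => {
    while (next < statements.length) {
      const i = next++;
      answers[i] = await ask(verificationPrompt(statements[i]));
    }
  };
  const workerCount = Math.max(1, Math.min(limit, statements.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return answers;
}
```

Capping concurrency is mostly about staying within the provider’s rate limits; the right value depends on the account.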

This is the prompt:

```
`${fact}

Verify the above fact. Return true, false, or null (if not enough sources could be found to verify).`
```

To interpret the response, the code checks whether true, false, or null is contained in the returned string. It’s a naive approach and sometimes reports all three at once, because Perplexity responds with a sentence along the lines of “There is not enough information to verify the result being either true or false, so the correct response is null”. This part is not where I wanted to spend time, so I left it like that.

Apart from this small issue it worked surprisingly well. Well enough that I didn’t spend any time on improving it, apart from one specific case.

Within an article, each sentence relies on the context of that article. Verifying sentences without that context is often impossible.

One particular example is the sentence “The draft defence budget for 2025 is €53bn.” The article is about Germany, so while reading it, it’s clear that Germany’s budget is meant. But that information is lost when the sentence is sent to Perplexity. For some reason it always assumed it was the Netherlands’ budget, which I thought was kind of funny.

The solution to that particular problem was converting each sentence into a self-contained statement before sending it to Perplexity for verification. Since every sentence is already analyzed in the context of the article, simply amending the prompt did the trick.

Below is the same prompt as above, with the instruction “Also convert the sentence into another one to make it a self-contained statement, to not require any additional information to understand and verify it.” added at the end.

```
`Original text: "${articleText}"

Sentence to analyze: "${fact}"

Analyze this sentence from the text along the following dimensions:

- Relevance & Context: How central is the sentence to the text's main arguments or narrative?

- Impact of Misinformation: If the sentence is wrong, would it have significant consequences?

Also convert the sentence into another one to make it a self-contained statement, to not require any additional information to understand and verify it.`
```

This results in the sentence “Germany’s draft defence budget for 2025 is €53bn.”, which contains all the information needed for verification.

This step considerably improved the accuracy of verification.
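In code, the extension amounts to one more field in the tool result, and the rewritten statement, not the original sentence, is what gets sent for verification. `ContextualAnalysis`, `selfContainedStatement`, `statementToVerify`, and the fallback to the original sentence when the rewrite comes back empty are hypothetical.

```typescript
type Rating = "low" | "medium" | "high";

interface ContextualAnalysis {
  relevance: Rating;
  impact: Rating;
  // Hypothetical extra field: the sentence rewritten to stand on its own.
  selfContainedStatement: string;
}

// Returns the text to send for verification, or null if the sentence is skipped.
function statementToVerify(original: string, analysis: ContextualAnalysis): string | null {
  if (analysis.relevance === "low" || analysis.impact === "low") return null;
  const rewritten = analysis.selfContainedStatement.trim();
  // Fall back to the original sentence if the model returned an empty rewrite.
  return rewritten.length > 0 ? rewritten : original;
}
```

Because the rewrite comes out of the same in-context call as the ratings, it adds output tokens but no extra request.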

The code

This is a separate heading so people looking for the code can jump straight here. In short: I’m not including it because it’s atrocious.

The goal was validating the approach, not writing good code. For the sake of speed I had Claude generate the code and iterated with it until I arrived at the final state. Along the way it introduced some (UI) bugs that I couldn’t be bothered to fix: the typical 70% problem with AI-generated code.

You could probably use this article as a prompt and get a similar result anyway.

Conclusion

I’m quite happy to say that the current version works for the most part, better than I initially expected. However, there are still things to iron out.

The verification sometimes doesn’t find the right content to verify a statement, even though it exists. It also has difficulties with more complex statements. Additionally, it searches the internet without limiting results to credible sources. I assume Perplexity does some of that, but I’m not sure to what extent.

Similarly, statement extraction currently works by splitting text into sentences. That’s fine for a prototype, but a single sentence can contain multiple statements.

Another interesting case is quotes, which need to be verified twice: did the person really say what is quoted, and is what they said correct?

And arguably the most important improvement is to find a way to extract and categorize statements in a single pass, to bring the costs down. Currently the whole article is sent n times to the LLM (where n is the number of sentences). For long articles the costs can balloon quite fast.

This issue in particular makes the approach, in its current form, infeasible to integrate into a product. What could work, though, is a browser extension where users provide their own API key. The cost is predictable because the content is known once the user is on a website, so the extension’s UI could show the predicted cost to the user, which would make for a nice UX.
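A back-of-the-envelope estimator for the in-context step, which is what makes costs grow with article length. It’s a sketch under stated assumptions: `approxTokens` uses the rough rule of thumb of about four characters per English token instead of a real tokenizer, the price is a parameter to fill in from the provider’s price list, and prompt instructions, output tokens, the out-of-context classification, and the Perplexity calls are all ignored.

```typescript
// Rough rule of thumb (~4 characters per token for English text); not a real tokenizer.
function approxTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

interface Pricing {
  // Hypothetical: USD per one million input tokens, taken from the provider's price list.
  inputPerMillion: number;
}

// The in-context step sends the whole article plus one sentence, once per sentence.
function inContextInputTokens(articleText: string, sentences: string[]): number {
  const articleTokens = approxTokens(articleText);
  return sentences.reduce((sum, s) => sum + articleTokens + approxTokens(s), 0);
}

function estimateCostUsd(articleText: string, sentences: string[], pricing: Pricing): number {
  return (inContextInputTokens(articleText, sentences) / 1_000_000) * pricing.inputPerMillion;
}
```

Because both the number of sentences and the size of each request grow with the article, doubling an article’s length (at a similar average sentence length) roughly quadruples the input tokens of this step. Since an extension knows the page content up front, a function like this could produce the estimate before any request is made.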